Deckchairs on the Titanic has been tested for balance using AI. We sat down for a conversation with Dan Kent, developer of the AI used to test the game to discuss how this worked, and what possibilities AI holds for board game development.

Tom: Why did you suggest AI testing when you played Deckchairs on the Titanic?

Dan: When you look at a Deckchairs on the Titanic game board, you might ask, “How do I know if this is fair to all players”?

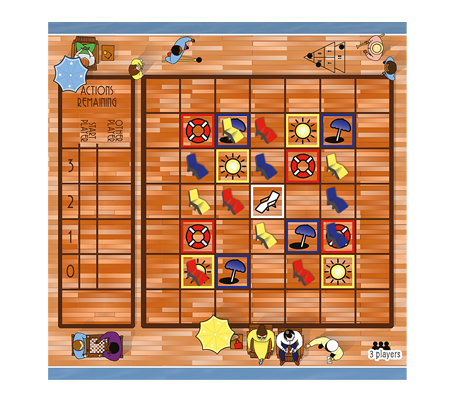

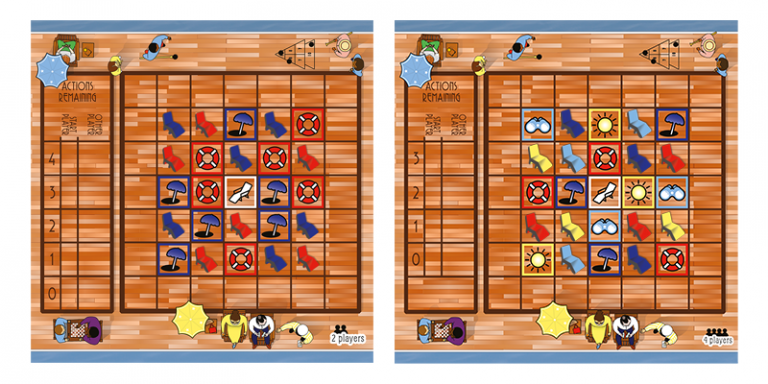

If we look at this board setup we can guess that it’s a very advantageous setup for the red player. Blue starts with all their deckchairs at the edge of the board. Their scoring spaces are far away from the most valuable centre square and on the opposite side of the board. As humans we can look at this and see that it’s unfair, and the AI agrees – red wins 99.95% of the time and quite frankly I have no idea how blue won any at all.

But what about these boards? You can see the symmetry in the 2 and 4 player boards and feel that those are balanced, but 3 players can’t go symmetrically on a square board. So the 3 player boards look strange, and trying boards which appeared to have some symmetry resulted in unfair boards. Through trial and error we were able to find combinations of boards that allowed fair play with all players winning 32-34% of the time.

How did you approach the creation of the AI?

Firstly, there is some debate about what ‘counts’ as AI. The techniques I used to run tests on the Deckchairs rule set were by no means cutting-edge, but very much fall within the subject of AI in academia. In the context of games, anything that has a computer play a game is seeking to replicate an aspect of human intelligence and so is artificial intelligence in some sense. Therefore, I think the term is appropriate.

The overall approach I used was similar to that employed by chess engines. [Deckchairs] lends itself well to this approach because it shares some things in common with chess, even though it is a very different game. While there is a random element to the game, most moves are deterministic and players have perfect information about the current state of the game.

Essentially, I expressed the rules of the game in terms of:

1) The starting game state

2) What decisions a player can make at each phase of the game

3) The effects of those decisions on the game state

4) How to calculate the value of a game state to a player (the score)

Once I had these in code, it was easy to have a computer play games of Deckchairs against itself, selecting moves and logging the results of games. Initially, I had the computer choose moves completely at random, acting like the worst possible player of the game. While the results of games played by such bad players are not particularly interesting, these simulations are very fast to run and provide a good baseline for how balanced a starting setup is.

The next step was to have the computer play a bit more intelligently, choosing sensible moves rather than random ones. Doing this meant having the program explore different possible move options. Each move evaluated would result in adding a new game state to a branching tree of states. If the program just tested each move immediately available to it, this tree would be just one layer deep. This is not a very effective approach for Deckchairs as soon as the Ship Movement cards come into play. Using this approach is like a human player who does not think ahead to the ship movement at the end of the round, meaning their deckchairs are moved off the scoring spaces they put them on.

In order to play at a level approaching that of a human, the program would need to look ahead at least as far as the end of each round, when ship movement happens and the score is calculated. This creates a problem – the game has so many possible moves at each phase that the tree of possible game states very quickly becomes impractical to exhaustively search. Simple games like noughts and crosses or even draughts can be ‘solved’ by evaluating every single possible game state by brute force; but more sophisticated games require more sophisticated approaches.

Here I again took inspiration from chess engines by employing Monte Carlo Tree Search (MCTS). As the name implies, this technique has an element of randomness to it. The idea is that the engine runs a series of imaginary games. To start with, it chooses moves randomly, but it remembers the values of the eventual game states reached through each move and, in later iterations, uses those values to weigh the likelihood of choosing each move. That means that more promising branches of the tree are explored more than those which score poorly.

A nice feature of MCTS is that you can tune the quality of the player by changing the number of iterations it performs before committing to a move. At the extreme low end, it acts just like the random player described above. Once many thousands of iterations are applied, it can find very good scoring strategies. This provides the opportunity to examine game balance for different levels of player – some starting setups might be balances for beginners but have imbalances that can be exploited by more experienced players.

For these tests, I kept things simple by having all of the virtual players play at the same level as each other and with the same goal (to win the game). It would be quite possible to run additional tests of what happens when players of different ability come up against each other or when players are not solely motivated by a binary win/don’t win goal. Different tactics may become relevant if a player is trying to maximise their own score, or the difference between their score and their opponent’s.

If we have AI playing against itself then those are equal and all you are left with is the boards as variables.

How true is this position? If the AI chooses randomly then is it testing the board state at all?

That’s true but it can still tell you some things – for players with literally no skill, does that board favour one player or another? If you created a massively unbalanced board then even random players would win if the board was in their favour just through sheer luck. Plus the fact that it’s quick to play through a massive number of games in a small amount of time. And I think in the future if we do any of this kind of AI testing, even though initially I only coded the random ones to test the engine out, I would actually use it to create baselines. It’s interesting to compare results for a board with random players versus results of a board where the players are MCTS bots with a high number of iterations to select their moves, because at that point the bot could play like a very, very good human player. In fact, given enough processing I would be surprised if you couldn’t get it to beat any human player – at least until the point where chess is where players develop deep strategies and humans might find ways to beat the AI bots. This is why the best chess AIs use additional techniques on top of MCTS. But for a game like Deckchairs you could set the MCTS to enough moves and it would be very difficult to beat the computer. It is a game that lends itself quite well to that approach, because planning ahead and looking at the different permutations of what you do and what your opponents do, is a process that works.

So if you throw enough processing power at it, it’ll be able to try out all the various possibilities and the Monte Carlo approach means it’ll spend its time exploring the branches that are most profitable for it –finding the combinations of moves that both score itself points and also rob points off opposition. The Monte Carlo approach is very good at searching through possibilities to find these moves if you give it enough iterations to play with. Where it’s bad is where there are lots of random elements, but once you give it 10,000 iterations to play with, the first 1,000 might be quite random but it will quickly zone in on where the best moves are and follow those lines down the tree.

I’m just thinking about some of the things that made Deckchairs particularly suitable for this is that you’ve got perfect knowledge of the board state – there’s no hidden information at any point of the game. There’s a small amount of randomness in terms of which Ship Movement cards come up and what order they are in but that’s it. Those random elements will make the game harder to do through an MCTS.

Essentially what those random elements do is add another set of nodes in the tree, so they increase the branching factor. With Deckchairs, the randomness comes at the point where the card is turned over – once the card is revealed, it becomes part of the state of the game, part of the perfect information players have. The imperfect information is about the cards which are going to be turned over later. So that point of randomness gives you 8 possibilities (because you start off with the No Movement card in every game) so that gives you branches, so when the MCTS gets to that point, there are 8 branches that it has to take. So it means that there are more positions to be evaluated because it has to consider each of those possibilities. But in the testing I did, it didn’t affect the testing very much.

I think for most games there is a kind of trade off in terms of processing time to evaluate moves, versus the value you get from thinking that far ahead. I found with the tests on Deckchairs there’s almost no point in looking further ahead than the end of the current round. There’s potentially some benefits to be had by positioning yourself for the next round, but it’s much more important to maximise value from the current round. When I had the AI looking a lot further ahead, it didn’t gain that much benefit from looking further ahead. It was pretty useless when it didn’t look ahead at all, but the biggest benefit came from when I let it look ahead to the end of the current round.

That actually lines up with some tactical advice that I give to people: that sometimes in the game you get to the last move in the round and you can’t improve your position or hurt anyone else. But what you might be able to do is look ahead at what’s coming up the next round and get one of your deckchairs so it’s already in a good position for the next round.

And that’s what I saw the AI doing, that most of the time it would not look beyond this round but occasionally it would take advantage of a position like that.

Thinking ahead to our next game, Sandcastle Scramble – one of the complications there is that you’ve got a hand of cards so (a) you’ve got hidden information in terms of what people have in their hands, and (b) you don’t know what’s in the deck. So I guess if you’ve got 50 cards in the deck, you’ve got 50 possibilities minus the cards that are out. So it’s just a bigger number for the AI to handle isn’t it?

Yes, the branching factor will be bigger which makes it more difficult, but you can also use “heuristics” that can be used by instead of letting the AI work it out for itself, you actually tell it that there are certain kinds of moves that are just not sensible at all, so it shouldn’t even bother considering them.

You could attack your own sandcastle, I think, by the rules of the game.

So rather than having the AI evaluate what happens if it attacks positions on its own castle and goes off down all those branches that definitely won’t bear any fruit, you can just give the AI a heuristic that says “don’t consider these moves” even though they are legal moves, and lets you focus on the moves that are all worthwhile.

One of the balancing jobs we’ll need to do on that is to make sure all the weapons are balanced properly. So how would you apply an AI to look at that?

You could set it up. When you are testing you can set up the game so it is rigged essentially, so one player always gets one weapon and another player gets another weapon and they play them against each other to see if weapon A always wins against weapon B.

Now we get to talk about all the different types of ‘pair-wise matching ranked’ methodology. This means you get every weapon to play off against every other weapon and you see which is the best by which gets most wins.

Yes, and you can also look for rock-paper-scissors type situations

Could you use an AI to actually engineer those situations? Could you run the AI and then you don’t have an RPS situation but you want one, could you use the AI to make suggested adjustments?

That’s definitely an interesting idea, what characteristics would a set of weapons need to have to produce that ring characteristic? I’d have to have a think about how that could work. You could definitely do it through trial and error – try some adjustments and then have the AI play through thousands of games to see what happens. I’d actually give it a subset of the game to play – so you would start with some castle already built and weapons in place, and play from that point on.

Just thinking about other games, would you do a similar kind of process for an asymmetrical faction game, something like cosmic encounter, in terms of playing the factions off 1vs1?

I think that would be the kind of thing where you’d have to split things; so you’d set a script up so that you’d set up a particular set of factions. Say it was going to be a four-player game, you’d run through combinations of four factions. You’d probably have to run it for days to play through all the possibilities, but you could have the script run so you’d just leave it to go. Then at the end of it you’d get a set of results to tell you which factions won most games but also tell you how did faction A do when faction B was in the game and things like that.

Time is the problem here. You would need a lot of processing power to reduce the time you need to play the games. I think that’s the end game here, that you set up a system where you have a service online, where people code up the rules of their game in a particular language, upload it to the service which runs it on some super fast cloud instances and then spits back the results.

Here’s a question for you Dan, I know Weightlifting House has taken off so you don’t have time for this but is this a potential business for someone?

I think so yes, I’d love to be involved in some kind of advisory capacity. I think the way that the board game industry has grown means that services for game developers is going to be a growth area. Board games are a competitive market so anything that can help produce better games more quickly would be something that people will be prepared to pay for.

Everyone knows that testing a game is a big pain point.

There’s certain types of games where you have to play test them loads and loads and loads, because stuff comes out in combinations. Like Magic: The Gathering we go right back to the start with the Black Lotus card which was horrendously overpowered because in combination with other things you can have a first time – first hand – win, and you only pick that up through playtesting. And a lot of that is just about sample size so you’ve go to play through a lot of games to find that edge case that breaks your game.

That’s a perfect scenario for a long run of computer tests doing thousands and thousands of playthroughs and spitting back saying we’ve found these set of rules that come together and means this player becomes infinitely powerful.

So in an RPG game you could look for specific combinations that mean that players can do more than X damage with one hit?

Yes, or in a game with player elimination you could set it to look for things like what is the earliest a player gets eliminated, what is the average and distribution? So we might find that players can get knocked out in round 2 of a 10-round game and that’s not good, but if we tweak a rule then we can change so that so nobody gets knocked out until round 8 or 9, which is much better.

I think the great thing is that it doesn’t have to be a custom system for every game. The way MCTS works is it is a very generic algorithm. The only thing you need to do for each game is express the rules of the game in a way that allows the MCTS to apply them to the game state. Anything else that happens after that is just a standard set of algorithms that run and get you the results. The difficult bit – the bit you need a programmer for – is expressing the game rules in program language.

I think that’s a useful process to happen anyway, because if you can’t express your game rules in a programming language, then that’s an indication that something’s wrong. If your rules are ambiguous to the point that they can’t be expressed, then that probably indicates that you need to do something to remove grey areas.

Definitely. If there’s one thing a computer is good it, it’s logic, and if there’s one thing you want rules to be it’s straight up logical. So I can see how that in itself is a nice test for your rules.

The other kinds of things you’ve talked about AI being useful for, I really like the one about using AI to test how many tokens and things you need.

Yep, you can set it to say that in 100,000 games we played, 99% of games needed this number of tokens, but in 1% of games we exceeded that by this much. So then it’s the designer’s choice as to what tolerance they are happy with.

And the situation we’ve discussed before but you haven’t got here is similar to the player elimination which is something like an engine building game, where you want to stop the game at the point where someone has clearly won. Finding out what the trigger point for that is, whether it’s a number of rounds or a player achieves a certain state of play that means they will definitely win but it’s going to take another 5 or 10 rounds to reach the number of points you’ve arbitrarily decided, that can show up in AI.

So it seems to me like there’s huge scope to use AI in development.

If the core engine was a standardised thing, then all someone had to do would be code up their game, or pay someone to code up their game, in a language that engine understands; then as a designer you wouldn’t have to build a whole piece of software. Depending on the language used it might be possible for designers to learn it to the extent of being able to express their game in that language like scripting in Tabletop Simulator

Thanks Dan, it seems there are lots of possibilities for using AI in future development and we’re definitely interested in continuing to explore this area.

Additional edit: After posting this on Reddit, the developer of Boardgame Lab posted to let us know that they have a standardised platform for AI testing in development – this looks like a great opportunity for designers and publishers to utilise and is open for designers to sign up to preview the tools.

If you’re interested in the software side of this, all our coding is open source and you can find it on our GitHub.

Are you a designer who has used AI to test or develop their game? Have you played games which you think could have used more testing and does it sound like AI could solve the problem? Let us know in the comments below.